When Fairness Meets Kindness: How ChatGPT Helped Me Refine My Own Reasoning

Recently, I found myself in a peculiar workplace dilemma.

Some time ago, I had taken leave, only to have my colleague insist that I return as soon as possible. At the time, I asked him why he could not be more considerate, but I complied and came back quickly.

Fast forward: the tables turned, and he fell ill. This time, I suggested that he too should return early. His response was swift:

“What happened to your kindness preach?”

It was, in a way, an uncomfortably fair counter. I realised I was both right and wrong at the same time. Unsure of how to make sense of it, I brought the situation to ChatGPT.

—

ChatGPT’s Perspective

ChatGPT described my predicament as an ethical symmetry trap — a scenario where two moral frameworks collide:

Fairness / Reciprocity: Holding someone to the same standard they held you to, a tit-for-tat logic that enforces equality.

Kindness / Moral Consistency: Acting according to one’s professed values, regardless of how the other party behaved.

The discomfort I felt, it explained, is a form of cognitive dissonance: the psychological tension that arises when one's actions contradict one's previously stated beliefs or principles.

—

Why This Happens

According to ChatGPT, I had operated under two different yardsticks at different times:

1. When I needed leave, I invoked the principle of compassion.

2. When my colleague needed leave, I applied reciprocity instead.

Each principle is defensible on its own, but I could not apply both at once. Reciprocity satisfies logical symmetry; compassion satisfies moral alignment. My discomfort was the mental friction between the two.

—

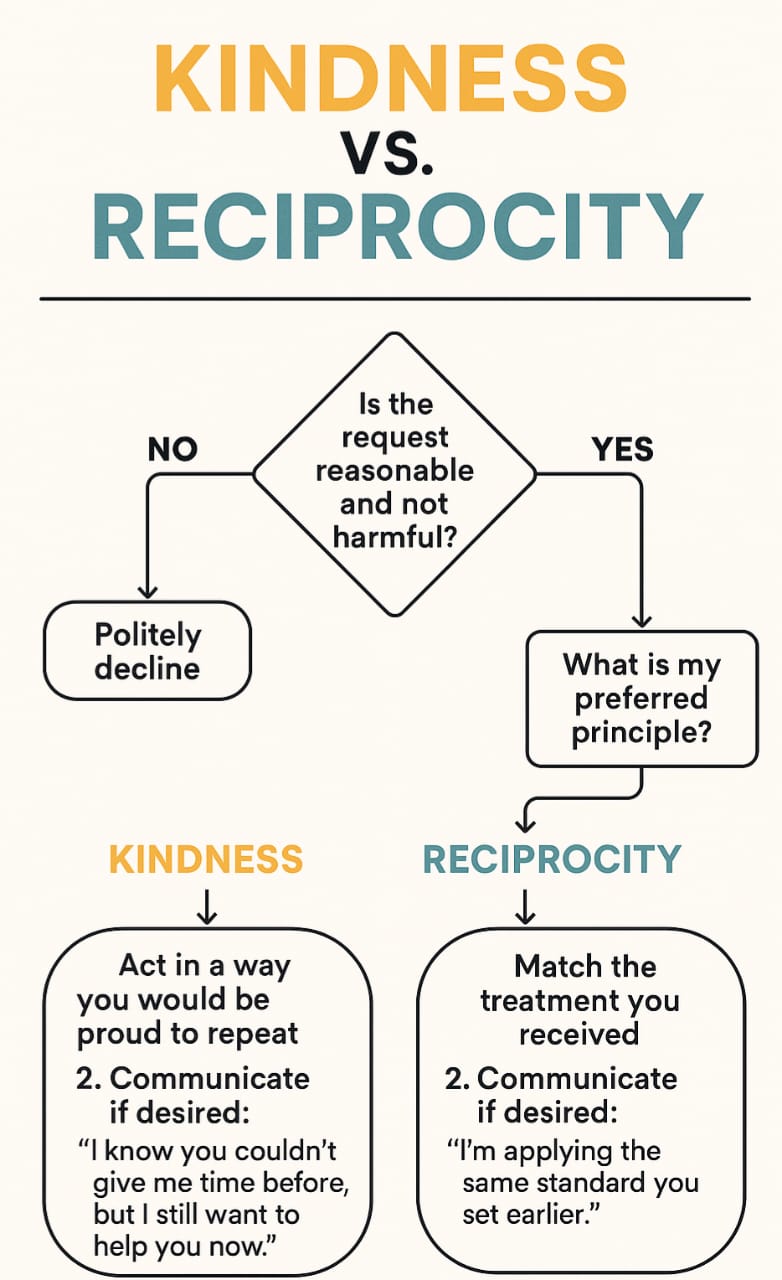

The Framework ChatGPT Suggested

To navigate future situations, ChatGPT offered a decision-making flow:

—

The “In the Moment” Test

ChatGPT also gave me a quick rule for future dilemmas:

> If this were reversed tomorrow, would I want my present choice to be the rule?

If the answer is yes, the decision likely aligns with both fairness and your personal values.

—

My Takeaway

This exchange showed me that moral dilemmas are not always about “right” versus “wrong” — sometimes they are value-versus-value conflicts. In those moments, the goal is not to find a flawless answer, but to choose a framework consciously, apply it consistently, and live with the outcome.